Batch API Guide: Optimize Performance by Combining Multiple API Requests

April 23, 2025

In today's performance-critical applications, every millisecond counts. Batch APIs have emerged as a crucial optimization strategy, allowing developers to combine multiple API calls into a single HTTP request. This comprehensive guide explores what Batch APIs are, why they matter, and how to implement them effectively in your projects.

Understanding Batch APIs

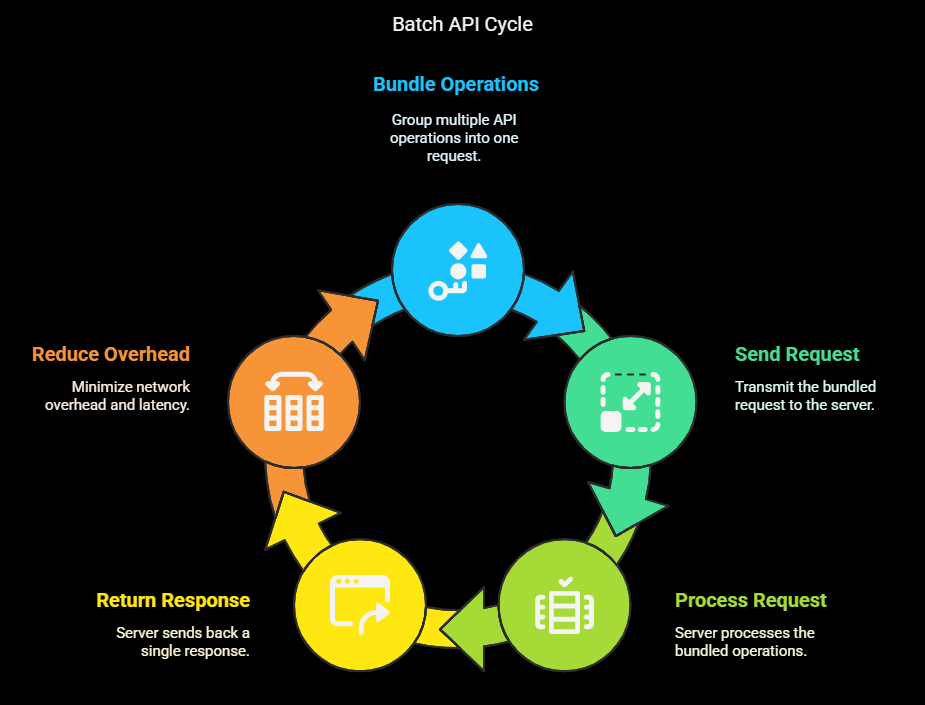

A Batch API enables clients to bundle multiple API operations into a single HTTP request-response cycle. Rather than sending separate requests for each operation, clients can package them together, significantly reducing network overhead and latency.

The Core Concept

The fundamental principle is simple: instead of making N separate HTTP requests, make one request containing N operations. The server processes all operations and returns a consolidated response with results for each operation.
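As a minimal client-side sketch of this idea, the helpers below wrap N operations in one envelope and then pair each response with the operation that produced it. The function names are illustrative, and the code assumes the format used in this guide's example: a `{ requests: [...] }` body and a `{ responses: [...] }` reply returned in request order.

```javascript
// Wrap N operations in a single batch envelope (illustrative helper).
function buildBatchBody(operations) {
  return JSON.stringify({ requests: operations });
}

// Pair each response with the operation that produced it. Assumes the
// server returns exactly one response per operation, in request order.
function pairResponses(operations, batchResponse) {
  const { responses } = batchResponse;
  if (!Array.isArray(responses) || responses.length !== operations.length) {
    throw new Error("Batch response does not match the number of operations");
  }
  return operations.map((op, i) => ({ op, ...responses[i] }));
}
```

Positional pairing keeps the envelope small; APIs that allow reordering typically attach an explicit ID to each operation instead.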

A Simple Example

Request:

```http
POST /batch

{
  "requests": [
    { "method": "GET", "path": "/users/1" },
    { "method": "GET", "path": "/users/2" },
    { "method": "POST", "path": "/posts", "body": { "title": "Hello World" } }
  ]
}
```

Response:

```json
{
  "responses": [
    { "status": 200, "body": { "id": 1, "name": "Alice" } },
    { "status": 200, "body": { "id": 2, "name": "Bob" } },
    { "status": 201, "body": { "id": 10, "title": "Hello World" } }
  ]
}
```

Benefits of Batch APIs

Performance Advantages

- Reduced Network Latency: Eliminates multiple round-trip times between client and server

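- Lower TCP/IP Overhead: Reduces connection establishment costs when a client would otherwise open a new connection per request (HTTP keep-alive and HTTP/2 already let separate requests share one connection)

- Fewer SSL/TLS Handshakes: Avoids repeated handshakes under the same conditions, since every new connection needs one

- Server-Side Efficiency: Lets the server optimize related operations together, for example by deduplicating lookups or combining database queries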
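To see where the latency win comes from, here is a deliberately idealized model, an assumption rather than a measurement: every operation takes the same server time, and each HTTP request costs one network round trip. Sequential requests pay that round trip N times; a batch pays it once.

```javascript
// Idealized latency model (assumption): each operation takes serverMs on the
// server, and each HTTP request adds one network round trip (rttMs).

// N separate requests issued one after another: N round trips
function sequentialLatencyMs(n, rttMs, serverMs) {
  return n * (rttMs + serverMs);
}

// One batch: a single round trip, with the server running all N operations
// concurrently, so the batch finishes when the (equally slow) operations do
function batchedLatencyMs(n, rttMs, serverMs) {
  return n === 0 ? 0 : rttMs + serverMs;
}

console.log(sequentialLatencyMs(5, 100, 20)); // 600
console.log(batchedLatencyMs(5, 100, 20)); // 120
```

With a 100 ms round trip, 20 ms of server work, and 5 operations, that is 600 ms versus 120 ms. Real gains are smaller when the client could already issue the requests in parallel (for example over HTTP/2), because parallel requests also overlap their round trips.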

Business Benefits

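- Cost Reduction: Lower API consumption costs for services that charge per HTTP request (many providers, including Google and Facebook, still count each operation inside a batch separately toward quotas and rate limits)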

- Improved User Experience: Faster response times lead to better engagement

- Resource Optimization: Reduced load on both client and server resources

- Bandwidth Savings: Less data transmitted for headers and connection management

Ideal Use Cases

Batch APIs shine in scenarios requiring multiple API operations to fulfill a single logical task:

Data-Heavy Applications

- Dashboards: Loading multiple widgets and metrics simultaneously

- Social Media Feeds: Fetching posts, user profiles, and engagement stats

- E-commerce Product Pages: Retrieving product details, pricing, inventory, and recommendations

Mobile Applications

- Initial App Load: Grabbing user data, settings, and content in one request

- Offline Sync: Sending multiple cached updates when reconnecting

- Resource-Constrained Environments: Limiting network usage on mobile networks
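For the offline-sync case, one possible shape is a local queue that records writes while the device is offline and flushes them as batches on reconnect. This is a sketch: the `OfflineQueue` class and the `sendBatch` transport it receives are hypothetical, and `sendBatch` is assumed to resolve to one `{ status, body }` entry per operation, in order.

```javascript
// Offline-sync queue (illustrative sketch): writes made while offline are
// stored locally, then sent as batches when the app reconnects.
class OfflineQueue {
  constructor(maxBatchSize = 20) {
    this.pending = [];
    this.maxBatchSize = maxBatchSize;
  }

  // Record an operation instead of sending it immediately
  enqueue(operation) {
    this.pending.push(operation);
  }

  // Flush queued operations in chunks of at most maxBatchSize.
  // sendBatch(ops) is a hypothetical transport that resolves to one
  // { status, body } entry per operation, in order.
  async flush(sendBatch) {
    const queued = this.pending;
    this.pending = [];
    const failed = [];

    for (let i = 0; i < queued.length; i += this.maxBatchSize) {
      const chunk = queued.slice(i, i + this.maxBatchSize);
      let responses;
      try {
        responses = await sendBatch(chunk);
      } catch (err) {
        // Connection lost again: keep this chunk and everything after it
        failed.push(...queued.slice(i));
        break;
      }
      responses.forEach((r, j) => {
        // Keep operations that failed so the next flush retries them
        if (r.status < 200 || r.status >= 300) failed.push(chunk[j]);
      });
    }

    // Failed operations go back ahead of anything enqueued during the flush
    this.pending = failed.concat(this.pending);
    return queued.length - failed.length; // number of operations synced
  }
}
```

A production version would also persist `pending` across app restarts and would likely surface, rather than endlessly retry, operations that fail with a permanent error such as 400.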

Backend Systems

- ETL Processes: Submitting multiple data transformations

- Reporting Systems: Fetching various metrics for report generation

- Microservices Communication: Reducing inter-service call overhead

Implementation Strategies

Server-Side Implementation

Here's an example using Node.js and Express. It assumes the router is mounted behind the `express.json()` middleware so that `req.body` holds the parsed JSON:

```javascript
const express = require("express");

const router = express.Router();

// Illustrative cap on operations per batch (an assumption; tune for your service)
const MAX_BATCH_SIZE = 20;

// Batch API endpoint
router.post("/batch", async (req, res) => {
  try {
    const requests = req.body && req.body.requests;

    // Validate batch request structure
    if (!Array.isArray(requests)) {
      return res.status(400).json({ error: "Invalid batch request format" });
    }

    // Reject oversized batches before doing any work
    if (requests.length > MAX_BATCH_SIZE) {
      return res
        .status(413)
        .json({ error: `Batch exceeds ${MAX_BATCH_SIZE} operations` });
    }

    // Process each request in parallel. Promise.all preserves input order,
    // so responses[i] always corresponds to requests[i].
    const responsePromises = requests.map(async (request) => {
      try {
        // Validate individual request
        if (!request || !request.method || !request.path) {
          return { status: 400, body: { error: "Invalid request format" } };
        }

        // Route the request to the appropriate handler.
        // In production, you would have a proper routing mechanism.
        const result = await processRequest(request);
        return { status: result.status, body: result.data };
      } catch (error) {
        // A failing operation produces an error entry instead of failing the whole batch
        return {
          status: error.status || 500,
          body: { error: error.message || "Internal server error" },
        };
      }
    });

    // Wait for all operations to finish
    const responses = await Promise.all(responsePromises);

    // Return batch response
    res.json({ responses });
  } catch (error) {
    res.status(500).json({ error: "Batch processing failed" });
  }
});

// Helper function to process individual requests.
// Simplified stub: a real implementation would dispatch on method and path.
async function processRequest(request) {
  return {
    status: 200,
    data: { message: `Processed ${request.method} ${request.path}` },
  };
}

module.exports = router;
```

Client-Side Integration

A simple client-side implementation using JavaScript's fetch API:

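```javascript
// Send several operations in one batch request. Resolves to the array of
// per-operation { status, body } entries, in request order.
async function batchRequest(operations) {
  try {
    const response = await fetch("/api/batch", {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ requests: operations }),
    });

    // A non-2xx status here means the batch as a whole was rejected
    if (!response.ok) {
      throw new Error(`Batch request failed with status ${response.status}`);
    }

    const result = await response.json();
    return result.responses;
  } catch (error) {
    console.error("Batch request error:", error);
    throw error;
  }
}

// Usage example
async function loadDashboard() {
  const operations = [
    { method: "GET", path: "/api/user/profile" },
    { method: "GET", path: "/api/metrics/summary" },
    { method: "GET", path: "/api/notifications" },
  ];

  const results = await batchRequest(operations);

  // Operations succeed or fail independently, so check each status;
  // a failed operation yields null instead of an error body
  const [profile, metrics, notifications] = results.map((r) =>
    r.status >= 200 && r.status < 300 ? r.body : null
  );

  // Update the UI with the retrieved data
  // (updateDashboard is application-specific and not defined here)
  updateDashboard(profile, metrics, notifications);
}
```

Best Practices and Considerations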

Error Handling

- Partial Success Handling: Design your system to handle scenarios where some operations succeed while others fail

- Error Propagation: Return clear error codes and messages for each operation

- Retry Strategies: Retry only the operations that failed, with backoff, and only those that are safe to repeat (idempotent)
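One way to combine partial-success handling with retries is to resend only the failed operations, as a smaller batch, after an exponential backoff. In this sketch the retryable status set, the delays, and the `sendBatch` transport are assumptions; it also retries only methods that are idempotent by HTTP semantics (GET, HEAD, PUT, DELETE), so a failed POST is never repeated automatically.

```javascript
// Statuses treated as transient (an assumption; adjust per API)
const RETRYABLE = new Set([429, 500, 502, 503, 504]);
// Methods that HTTP defines as idempotent, so repeating them is safe
const IDEMPOTENT = new Set(["GET", "HEAD", "PUT", "DELETE"]);

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// sendBatch(ops) is a hypothetical transport resolving to one
// { status, body } entry per operation, in order.
async function batchWithRetry(operations, sendBatch, { maxAttempts = 3, baseDelayMs = 200 } = {}) {
  const results = new Array(operations.length); // results[i] answers operations[i]
  let pendingIdx = operations.map((_, i) => i);

  for (let attempt = 1; attempt <= maxAttempts && pendingIdx.length > 0; attempt++) {
    // Exponential backoff before each retry: baseDelayMs, 2x, 4x, ...
    if (attempt > 1) await sleep(baseDelayMs * 2 ** (attempt - 2));

    const responses = await sendBatch(pendingIdx.map((i) => operations[i]));
    const stillFailing = [];
    responses.forEach((r, j) => {
      const i = pendingIdx[j];
      results[i] = r; // the latest response wins
      if (RETRYABLE.has(r.status) && IDEMPOTENT.has(operations[i].method)) {
        stillFailing.push(i);
      }
    });
    pendingIdx = stillFailing;
  }
  return results;
}
```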

Security Considerations

- Authentication: Apply proper authentication checks to each operation within the batch

- Authorization: Verify permissions for each individual operation

- Input Validation: Validate each request in the batch independently

- Rate Limiting: Count each operation in a batch, not just each batch request, toward rate limits so batching cannot be used to bypass them
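The sketch below shows what checking each operation individually can look like, and could stand in for the `processRequest` stub from the server example. The route table, the `scope` names, and the `user` object (assumed to come from authenticating the outer batch request once) are all hypothetical.

```javascript
// Hypothetical route table: each entry names the scope a caller needs.
const routes = [
  { method: "GET", pattern: "/users/:id", scope: "users:read",
    handler: ({ id }) => ({ id: Number(id) }) },
  { method: "POST", pattern: "/posts", scope: "posts:write",
    handler: (_params, body) => ({ id: 10, ...body }) },
];

// Turn "/users/:id" into a regex with a named capture group per :param.
// Patterns are assumed to contain only literal segments and :params.
function compile(pattern) {
  return new RegExp("^" + pattern.replace(/:(\w+)/g, "(?<$1>[^/]+)") + "$");
}

// Authorize and execute ONE operation from a batch. The caller was
// authenticated once for the outer request, but every operation is
// still checked against that caller's scopes.
async function dispatch(op, user) {
  if (!user) return { status: 401, body: { error: "Unauthenticated" } };
  for (const route of routes) {
    if (route.method !== op.method) continue;
    const match = compile(route.pattern).exec(op.path);
    if (!match) continue;
    if (!user.scopes.includes(route.scope)) {
      return { status: 403, body: { error: `Missing scope ${route.scope}` } };
    }
    const body = await route.handler(match.groups || {}, op.body);
    return { status: op.method === "POST" ? 201 : 200, body };
  }
  return { status: 404, body: { error: "No such route" } };
}
```

Because authorization runs per operation, a caller who may read users but not write posts gets a 200 for the first kind of operation and a 403 for the second within the same batch.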

Performance Optimization

- Request Grouping: Group related requests that might access the same data

- Payload Size Limits: Set reasonable size limits to prevent excessively large requests

- Request Prioritization: Consider implementing priority levels for operations within a batch

- Response Streaming: For large responses, consider streaming results as they become available
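Request grouping can also happen transparently on the client. The following sketch, in the spirit of the DataLoader pattern, coalesces calls made within the same short window into batches and splits them so no batch exceeds a size limit. `createBatcher`, `sendBatch`, and the default limit of 20 are illustrative assumptions.

```javascript
// Client-side request grouping (illustrative sketch): calls made within the
// same window are coalesced into batches of at most maxBatchSize.
// sendBatch(ops) is a hypothetical transport resolving to one
// { status, body } entry per operation, in order.
function createBatcher(sendBatch, { maxBatchSize = 20, windowMs = 0 } = {}) {
  let queue = [];
  let timer = null;

  async function flush() {
    const items = queue;
    queue = [];
    timer = null;
    // Split oversized queues so no single request exceeds the server's limit
    for (let i = 0; i < items.length; i += maxBatchSize) {
      const chunk = items.slice(i, i + maxBatchSize);
      try {
        const responses = await sendBatch(chunk.map((item) => item.op));
        chunk.forEach((item, j) => item.resolve(responses[j]));
      } catch (err) {
        // Transport failure: reject every caller in this chunk
        chunk.forEach((item) => item.reject(err));
      }
    }
  }

  // Queue one operation; resolves with its own { status, body } entry
  return function enqueue(op) {
    return new Promise((resolve, reject) => {
      queue.push({ op, resolve, reject });
      if (timer === null) timer = setTimeout(flush, windowMs);
    });
  };
}
```

Chunks are sent one after another here, which is gentle on rate limits; sending them concurrently would trade that for lower latency.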

Monitoring and Debugging

- Request Tracing: Implement request IDs for each operation within a batch

- Logging: Log detailed information about batch processing

- Performance Metrics: Track execution time for individual operations within a batch
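A minimal way to get per-operation request IDs and timings is to wrap each operation before executing it. In this sketch, `runTracedBatch`, the `batchId:index` ID scheme, and the JSON log-line format are assumptions; `handler` stands for any per-operation executor, such as the server example's `processRequest`.

```javascript
// Run a batch while recording one trace entry per operation (illustrative sketch).
async function runTracedBatch(batchId, operations, handler, log = console.log) {
  return Promise.all(
    operations.map(async (op, index) => {
      const traceId = `${batchId}:${index}`; // unique per operation within the batch
      const start = Date.now();
      let status;
      try {
        const result = await handler(op);
        status = result.status;
        return { ...result, traceId };
      } catch (err) {
        status = err.status || 500;
        return { status, body: { error: err.message }, traceId };
      } finally {
        // One structured log line per operation: which op, its outcome, its duration
        log(JSON.stringify({
          traceId,
          method: op.method,
          path: op.path,
          status,
          durationMs: Date.now() - start,
        }));
      }
    })
  );
}
```

Returning the `traceId` with each response lets a client quote it in bug reports, so a single slow or failing operation can be found in the server logs.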

Real-World Examples

Industry Leaders Using Batch APIs

- Google APIs
  - Many Google APIs support batching through the Google API Client Libraries; since Google retired its global batch endpoint in 2020, each batch request must target a single API
  - Uses a multipart MIME format (`multipart/mixed`) for batch requests

- Facebook Graph API
  - Allows up to 50 operations in a single batch
  - Supports cross-referenced requests where later requests can use results from earlier ones

- Microsoft Graph API
  - Supports JSON batching with up to 20 requests per batch
  - Lets a request declare dependencies on other requests in the same batch through a `dependsOn` property

- Salesforce API
  - Provides composite resources for batching related operations
  - Can roll back the entire request when any subrequest fails (the Composite resource's `allOrNone` option)
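Dependency tracking of the kind Microsoft Graph offers can be approximated as follows. The sketch is loosely modeled on Graph's `dependsOn` field but is not Graph's implementation: each operation carries an `id` and an optional `dependsOn` array, independent operations run concurrently, and an operation whose dependency failed is skipped with 424 (Failed Dependency), the HTTP status defined for this situation. A cycle or an unknown ID rejects the whole batch. `runWithDependencies` and `handler` are hypothetical names.

```javascript
// Execute batch operations while honoring `dependsOn` (illustrative sketch).
// An operation starts only after all of its dependencies have finished.
async function runWithDependencies(operations, handler) {
  const byId = new Map(operations.map((op) => [op.id, op]));
  const started = new Map(); // id -> Promise of { id, status, body }

  function run(id, chain = new Set()) {
    if (started.has(id)) return started.get(id); // each operation runs once
    if (chain.has(id)) throw new Error(`Dependency cycle at ${id}`);
    const op = byId.get(id);
    if (!op) throw new Error(`Unknown dependency ${id}`);
    const nextChain = new Set(chain).add(id);

    const promise = (async () => {
      const deps = await Promise.all((op.dependsOn || []).map((d) => run(d, nextChain)));
      // If any dependency failed, skip this operation instead of running it
      if (deps.some((d) => d.status >= 400)) {
        return { id, status: 424, body: { error: "Failed dependency" } };
      }
      try {
        return { id, ...(await handler(op)) };
      } catch (err) {
        return { id, status: err.status || 500, body: { error: err.message } };
      }
    })();
    started.set(id, promise);
    return promise;
  }

  // Results come back in the original operation order
  return Promise.all(operations.map((op) => run(op.id)));
}
```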

Conclusion

Batch APIs represent a powerful optimization technique for modern applications, particularly those with complex data requirements or resource constraints. By reducing network overhead and server load, they enable faster, more efficient applications.

When properly implemented with attention to security, error handling, and resource management, Batch APIs can significantly improve both performance and user experience. Consider implementing them in your next project where multiple API calls are needed to fulfill user requests.